Research at the MIT (Massachusetts Institute of Technology) Media Lab indicates that facial recognition technology tends to be less accurate for women and for people with darker skin tones. The evidence suggests that the software makes inaccurate decisions because of the way it is programmed to work.

MIT researcher Joy Buolamwini said she realized there was a problem with facial recognition software when she noticed that it recognized her face faster and more accurately once she had put on a white mask. Before the MIT study, the problem for people with darker skin was largely a matter of speculation.

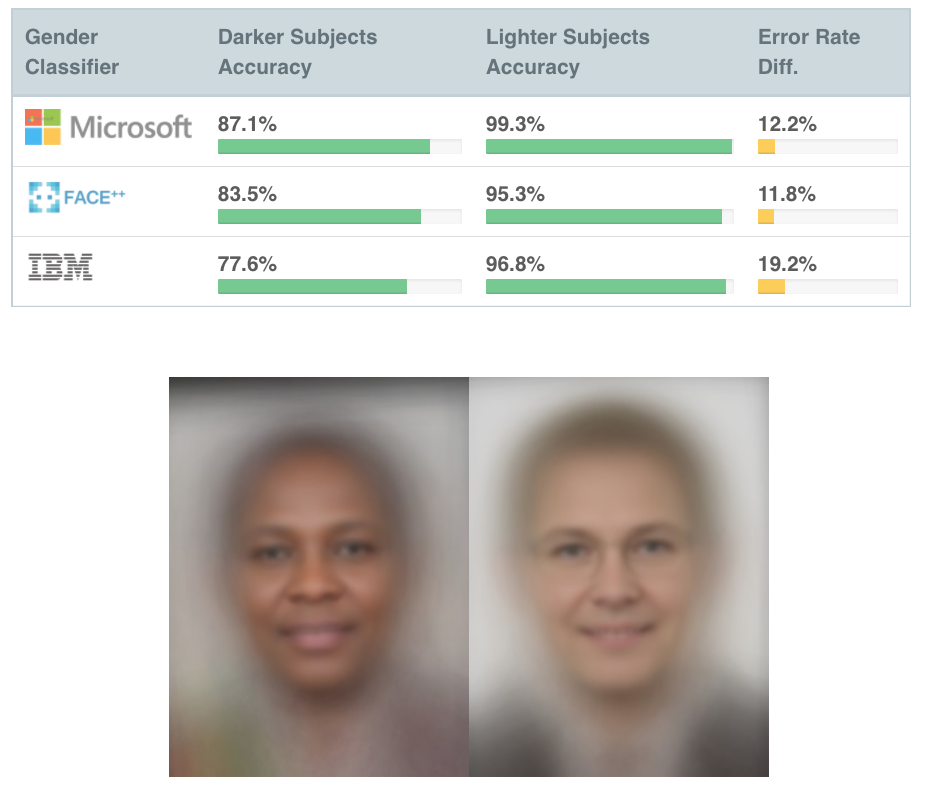

Buolamwini tested three facial recognition systems, from Microsoft, IBM, and Megvii of China. All of the tests showed that recognition accuracy varied with a person’s skin color and gender; the results were originally reported in The New York Times.

“Overall, male subjects were more accurately classified than female subjects replicating previous findings (Ngan et al., 2015), and lighter subjects were more accurately classified than darker individuals,” Buolamwini wrote in her paper. In other words, the software classifies lighter-skinned men more accurately than women or darker-skinned men.
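To make the idea of comparing accuracy across groups concrete, here is a minimal Python sketch of how per-group accuracy can be computed. It is only an illustration and not the method used in the study: the function name `accuracy_by_group`, the group labels, and the toy records are all made up for this example and do not reflect the study's data or results.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return {group: fraction of correct predictions} for each subgroup.

    Each record is a (group, true_label, predicted_label) tuple.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, true_label, predicted_label in records:
        total[group] += 1
        if true_label == predicted_label:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


# Made-up toy records (hypothetical, NOT data from the study):
# (subgroup, true gender, gender predicted by some classifier)
toy_records = [
    ("lighter male", "male", "male"),
    ("lighter male", "male", "male"),
    ("darker female", "female", "male"),
    ("darker female", "female", "female"),
]

per_group = accuracy_by_group(toy_records)
for group, accuracy in per_group.items():
    print(f"{group}: {accuracy:.0%}")
```

A single overall accuracy figure can hide this kind of gap, which is why breaking the results down by group matters.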

Furthermore, cameras deployed for security checks seem to have similar limitations. Many innocent people have been suspected of wrongdoing owing to inaccurate results from camera software.

This is not the first time research has shown software to be racially discriminating. Back in 2015, Google was called out by a software engineer after its software mistakenly identified his black friends as “gorillas”. It is still unclear whether that problem has been solved or whether Google simply removed the word “gorilla” from its face-detection algorithm.

It is true that software itself is neutral and relies entirely on its programmers. If the data set fed into it is sound, we should not face such issues in the future.

The post Are Software Algorithms racially biased too? appeared first on TechJuice.